To ensure a good user experience on your website – which includes how easy it is for a user to navigate your pages and how much they trust the information they find there – here are our favourite SEO and usability audit checks for optimal performance.

Auditing checklist

The following tips will help you build an overall picture of your website’s SEO.

Make your site mobile-friendly

Nowadays, most searches come from mobile devices, which is why Google now uses mobile-first indexing: it primarily crawls and ranks the mobile version of your pages. If you have a blog, check whether your host automatically adapts your pages for mobile. You can also use the device toolbar in Google Chrome’s built-in developer tools (DevTools) to see how each page responds on a mobile screen, one page at a time.

Alternatively, Hexometer.com can be deployed across your entire website, monitoring all your pages for issues that can affect user experience and conversion rates on desktop, mobile and tablet devices.
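A quick first check you can script yourself is whether each page declares a responsive viewport. The sketch below uses only Python’s standard html.parser; treating a missing "width=device-width" viewport as a red flag is a common heuristic rather than a full mobile-friendliness test, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class ViewportFinder(HTMLParser):
    """Records the content of the page's <meta name="viewport"> tag, if any."""

    def __init__(self):
        super().__init__()
        self.viewport = None

    def handle_starttag(self, tag, attrs):
        # html.parser lower-cases tag and attribute names, but not values.
        if tag == "meta":
            attr = dict(attrs)
            if (attr.get("name") or "").lower() == "viewport":
                self.viewport = attr.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """Heuristic: True if the page asks browsers to use the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    if finder.viewport is None:
        return False
    return "width=device-width" in finder.viewport.replace(" ", "").lower()
```

Run it over the HTML of each page you crawl; pages that return False are good candidates for a closer look in DevTools.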

Accessibility

If nobody can access your site, then SEO is a moot point. After all, an inaccessible site is an invisible site. One issue that can affect accessibility is the robots.txt file, which tells crawlers which parts of your site they may not access. However, a misconfigured robots.txt can also block all crawlers from your entire site by mistake (for example, a "Disallow: /" rule under "User-agent: *"). You can manually check whether this is the case for your website.
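To check robots.txt programmatically, Python’s standard urllib.robotparser module can evaluate the rules for you. This minimal sketch assumes you have already downloaded the file’s text (RobotFileParser can also fetch it itself via set_url() and read()); the list of crawler names and the helper name blocks_whole_site are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# Crawlers to test: an assumption, extend with the bots you care about.
CRAWLERS = ("Googlebot", "Bingbot", "*")


def blocks_whole_site(robots_txt: str, site: str, agents=CRAWLERS) -> bool:
    """Return True if robots.txt forbids every listed crawler from the homepage."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    parser.modified()  # mark the rules as loaded so can_fetch() consults them
    homepage = site.rstrip("/") + "/"
    return all(not parser.can_fetch(agent, homepage) for agent in agents)


if __name__ == "__main__":
    # Paste the contents of your own robots.txt here; this sample blocks everything.
    sample = "User-agent: *\nDisallow: /"
    print(blocks_whole_site(sample, "https://example.com"))
```

A True result means no listed crawler may even fetch the homepage, which is almost always a mistake on a public site.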

In the same vein, you can manually check whether robots meta tags are blocking crawlers. A noindex directive keeps a page out of the index and nofollow tells crawlers not to follow its links, so a misplaced tag can stop your pages being indexed and your links being followed.
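The robots meta tag check can be sketched the same way with Python’s html.parser. This hypothetical helper reads only name="robots" and name="googlebot" meta tags; it does not see directives sent in an X-Robots-Tag HTTP header, which you would need to check separately.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            # content is a comma-separated list such as "noindex, nofollow".
            for directive in attr.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html: str) -> set:
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


def blocks_indexing(html: str) -> bool:
    """'none' is shorthand for 'noindex, nofollow'."""
    directives = robots_directives(html)
    return "noindex" in directives or "none" in directives
```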

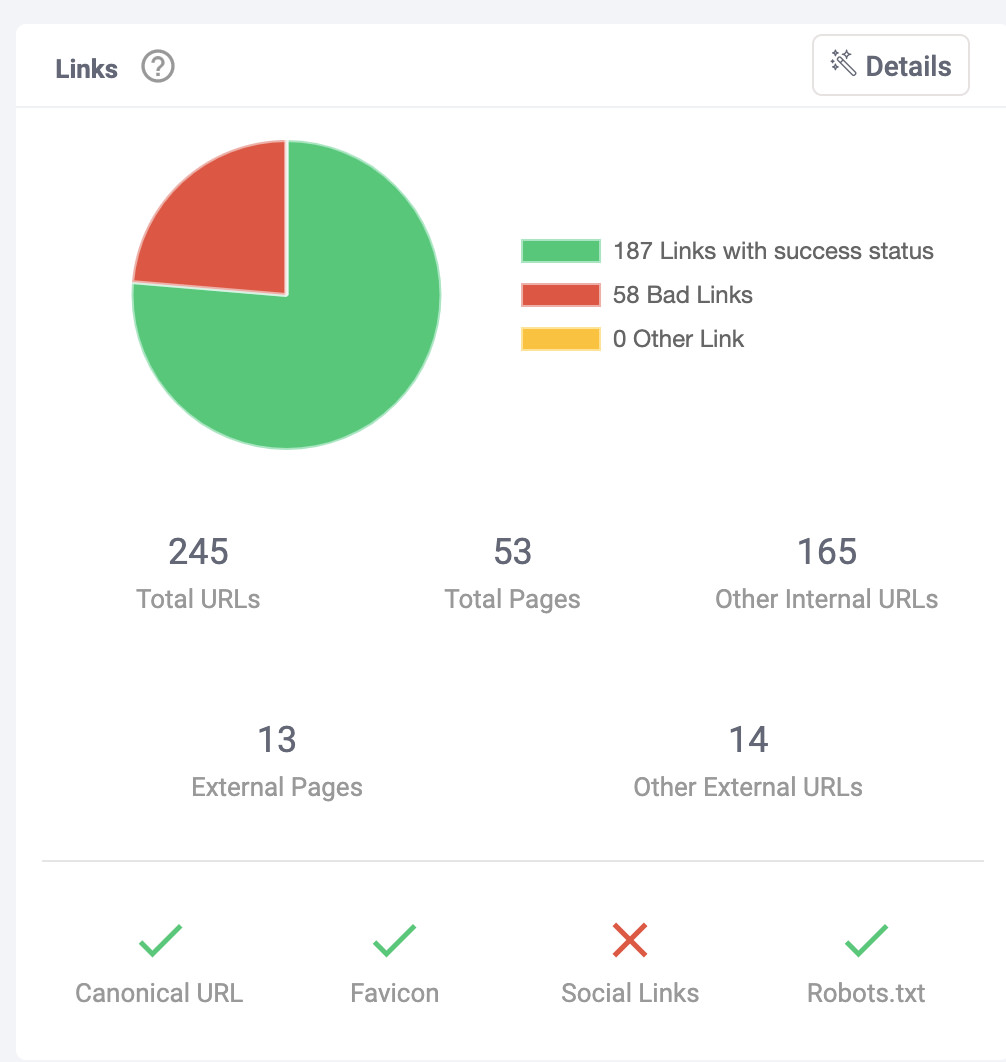

Of course, accessibility is also hindered if URLs are broken, so identify broken URLs and either fix them or redirect them to a page with similar content. This manual audit also gives you a chance to evaluate your redirection strategy, which could be another area for improvement.
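For a first pass over broken URLs, a short script can request each link and record its status code. This is a minimal standard-library sketch with hypothetical function names: it follows redirects and reports the final status, and because some servers reject HEAD requests (405), you may need to retry those with GET.

```python
import urllib.error
import urllib.request


def link_status(url: str, timeout: float = 10.0):
    """Return the final HTTP status for url, or None if it could not be reached."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        # urlopen follows redirects, so this is the status of the final page.
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are raised as HTTPError; .code holds the status.
        return err.code
    except OSError:
        # URLError (DNS failure, refused connection) and timeouts are OSErrors.
        return None


def find_broken(urls, status_of=link_status) -> dict:
    """Map each broken URL (status >= 400, or unreachable) to its status."""
    broken = {}
    for url in urls:
        status = status_of(url)
        if status is None or status >= 400:
            broken[url] = status
    return broken
```

Passing a different status_of function (for example, a dictionary lookup) lets you test the reporting logic without touching the network.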

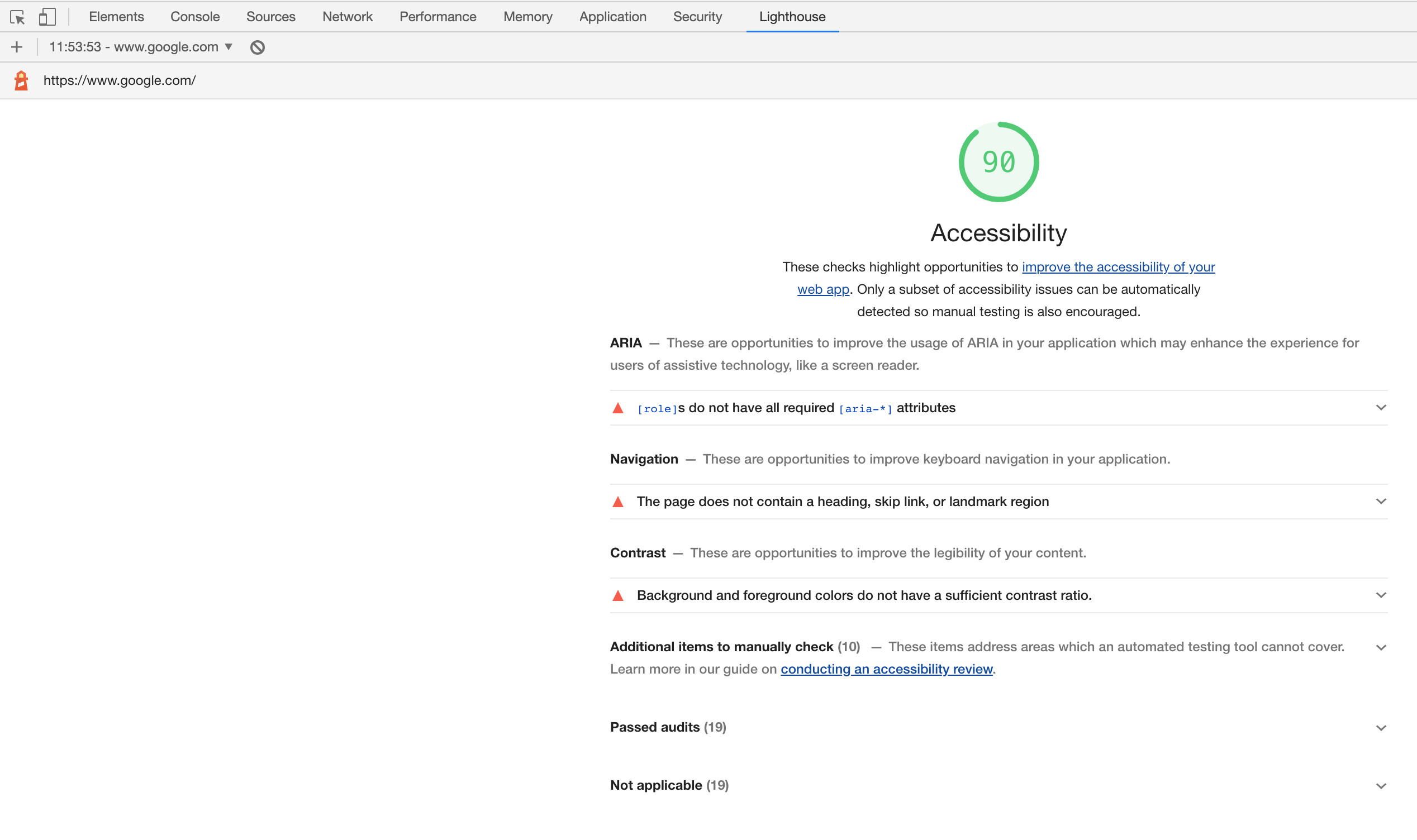

Google’s Lighthouse tool, built into Google Chrome’s developer tools (the Lighthouse panel), lets you check any page for accessibility issues.

Finally, take a deep dive into your sitemap. If your sitemap is a well-formed XML document, it should be crawled correctly; if it is not in the correct format, the quality of the crawl can suffer. In addition, if you find a page in the crawl that is not in the sitemap, this is a clear sign that you need to update your sitemap. Conversely, if a page in the sitemap does not appear in the crawl, it’s likely an orphaned page, one with no internal links pointing to it. Build an internal link to it and make it part of the site’s architecture.
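Comparing the sitemap with a crawl is essentially a pair of set differences. The sketch below assumes a standard urlset sitemap in the sitemaps.org namespace and a set of crawled URLs from whatever crawler you use; sitemap index files and URL normalization are out of scope, and the helper names are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> set:
    """Parse a <urlset> sitemap; raises ET.ParseError if the XML is malformed."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def compare_with_crawl(sitemap: set, crawled: set) -> dict:
    return {
        # Crawled but not listed: the sitemap needs updating.
        "missing_from_sitemap": crawled - sitemap,
        # Listed but never reached by following links: orphan candidates.
        "orphan_candidates": sitemap - crawled,
    }
```

An ET.ParseError from sitemap_urls is itself a finding: it means the sitemap is not well-formed XML.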

Hexometer monitors your website’s accessibility 24/7, alerting you when pages need your attention for an optimal user experience.

Site architecture

Site architecture is another factor to analyse when auditing accessibility. Simply put, it is the vertical depth and horizontal breadth of your website. The best scenario is a flat structure, in which important pages are only a few clicks from the homepage. To assess how flat your structure is, count how many clicks it takes to travel from the homepage to other important pages. Also check how well these pages link to one another, and make sure the most important pages are prioritized in the architecture.
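Click depth can be measured with a breadth-first search over your internal links, because breadth-first search finds the fewest clicks from the homepage to every reachable page. This sketch assumes you already have a link map (each page mapped to the pages it links to) from a crawl; the three-click threshold and the function names are illustrative assumptions, not a rule from any search engine.

```python
from collections import deque


def click_depths(links: dict, home: str) -> dict:
    """Breadth-first search: fewest clicks from the homepage to each reachable page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depth:  # first visit is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def too_deep(links: dict, home: str, max_clicks: int = 3) -> dict:
    """Pages that need more than max_clicks clicks to reach from the homepage."""
    return {page: d for page, d in click_depths(links, home).items() if d > max_clicks}
```

Pages that appear in your crawl but are missing from click_depths entirely cannot be reached from the homepage at all.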

The integrity of the architecture can be compromised by Flash and JavaScript navigation, because search engines may not be able to follow the links these technologies generate. Crawl your site once with these navigational elements enabled and once with them disabled, then compare the results.

Another good idea is to check whether Schema.org structured data has been implemented across your website. Implementing it can make your pages eligible for Google’s rich results, enhanced search listings (such as star ratings or FAQs) that stand out on the results page.
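To see whether structured data is present on a page, you can extract its JSON-LD blocks, the format Google recommends for Schema.org markup. This standard-library sketch reports the top-level @type values it finds; it ignores Microdata and RDFa, does not expand @graph arrays, and its names are hypothetical. Google’s Rich Results Test remains the authoritative check for eligibility.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the parsed contents of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self._inside = False
        self._buffer = []
        self.blocks = []
        self.invalid = 0  # blocks that were not valid JSON

    def handle_starttag(self, tag, attrs):
        if tag == "script" and (dict(attrs).get("type") or "").lower() == "application/ld+json":
            self._inside = True
            self._buffer = []

    def handle_data(self, data):
        if self._inside:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._inside:
            self._inside = False
            try:
                self.blocks.append(json.loads("".join(self._buffer)))
            except json.JSONDecodeError:
                self.invalid += 1


def schema_types(html: str) -> list:
    """Return the top-level @type values found in a page's JSON-LD."""
    extractor = JsonLdExtractor()
    extractor.feed(html)
    extractor.close()
    types = []
    for block in extractor.blocks:
        items = block if isinstance(block, list) else [block]
        for item in items:
            if isinstance(item, dict) and "@type" in item:
                types.append(item["@type"])
    return types
```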

For a simpler approach, you can deploy Hexometer and it will automatically crawl your entire website to look for HTML and architecture issues 24/7.

Audit your Google Analytics setup

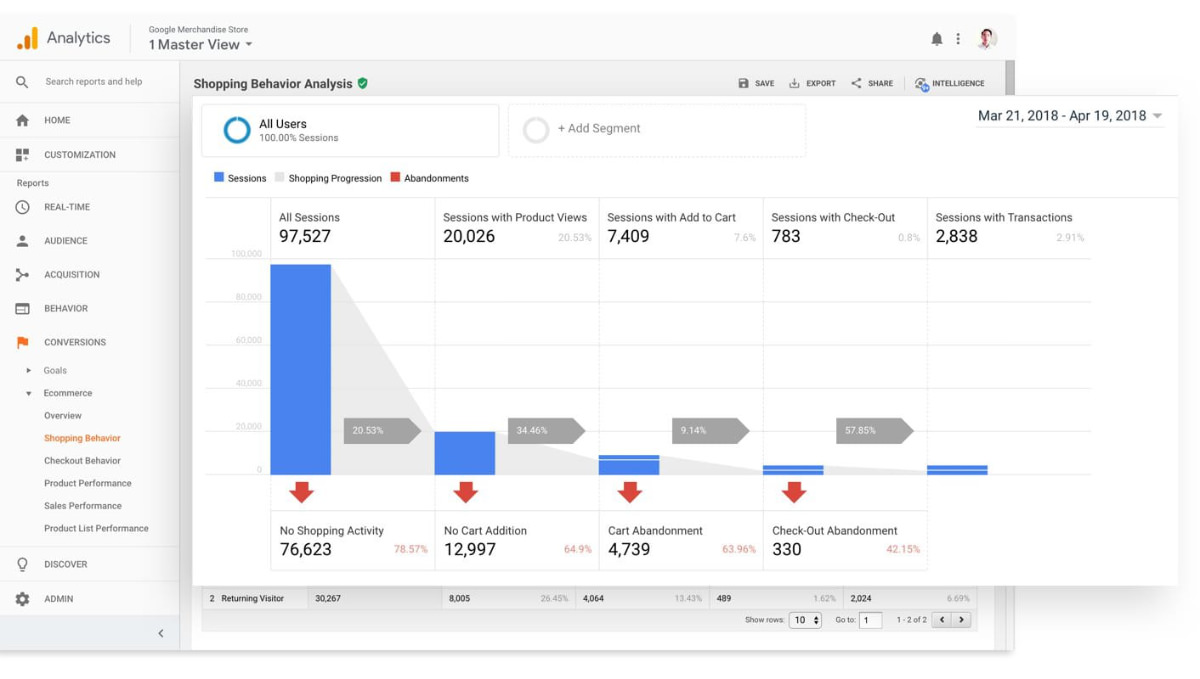

For SEO, it’s important to set up Google Analytics properly. A good first step is to deploy your Analytics tracking code through Google Tag Manager. You can then see how users interact with your content, for example whether a user completes each stage of your registration process, or where they abandon it.

Monitor trends in Google Analytics

You can review your organic traffic in Google Analytics. Once on the platform, go to Acquisition > All Traffic > Channels. In the Channels report, select Organic Search, then apply different filters and date ranges.

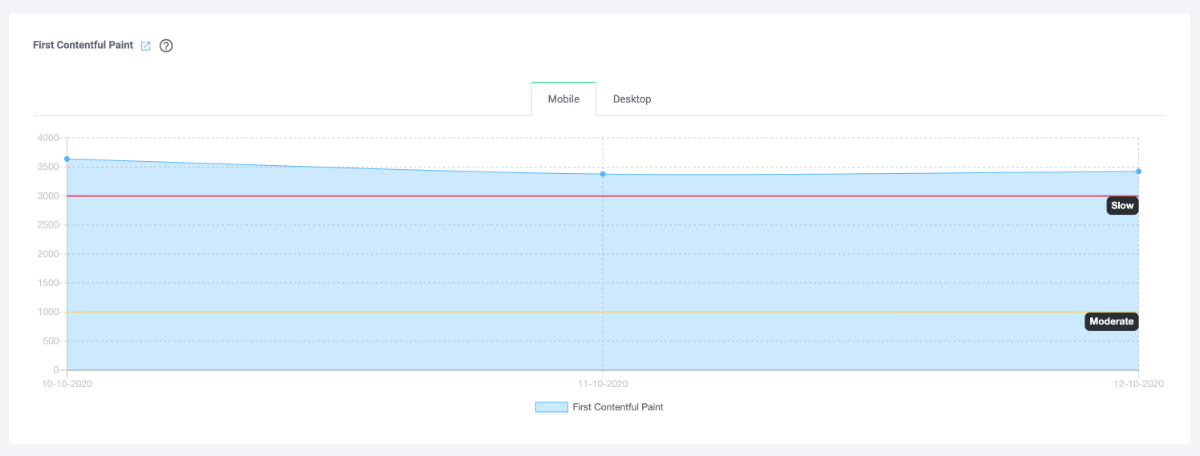

Make your site faster

It’s not rocket science to realize that the faster a website loads, the better. Even a delay of a few seconds can test a user’s patience, and they are unlikely to wait around or even return. It’s pretty unforgiving, so make sure your load times are optimal by cleaning up your HTML code. You can also compress your images so that they take up less space. Run speed tests regularly to stay on top of this issue.
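A crude speed test you can script is timing how long each page’s HTML takes to download. The sketch below is a starting point under stated assumptions: it measures only the HTML response (no images, scripts or rendering), the two-second threshold is illustrative, and the function names are hypothetical. Tools such as Lighthouse give a far fuller picture of load performance.

```python
import time
import urllib.request


def _download(url: str, timeout: float = 15.0) -> bytes:
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read()


def timed_fetch(url: str, fetch=_download):
    """Return (seconds, bytes) for downloading the page's HTML only."""
    start = time.perf_counter()
    body = fetch(url)
    return time.perf_counter() - start, len(body)


def slow_pages(urls, threshold_s: float = 2.0, fetch=_download) -> dict:
    """Map each URL whose HTML took longer than threshold_s to (seconds, bytes)."""
    slow = {}
    for url in urls:
        seconds, size = timed_fetch(url, fetch)
        if seconds > threshold_s:
            slow[url] = (round(seconds, 2), size)
    return slow
```

Network conditions vary, so run the check several times and from more than one location before drawing conclusions.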

Hexometer.com can help you identify slow loading pages and performance issues around the clock.

Get rid of zombie pages

Removing dead pages can streamline your site, which benefits both user experience and SEO. Consider getting rid of unnecessary pages, such as old press releases, thin content, boilerplate content and archive pages. If you have a blog, evaluate whether you need its category or tag pages.

This is also the time to pick up on broken links, 404 pages and server errors. Unfortunately, doing this manually is quite time-consuming, so we recommend an automated tool such as Hexometer that checks for them on a daily or weekly basis, so you can catch these issues soon after they happen.

Review and improve your content

As a rule of thumb, your content should have at least 300 words; very short pages risk being treated by search engines as thin content with little to say. More importantly, your content should always serve your users in some way. If they find the information useful, they will stay longer on the site, which should lower your bounce rate. Useful content is not spammy content: a post overstuffed with keywords will annoy users because it’s unreadable, and this won’t go unnoticed by search engines either. As a general rule, write well, and keep your introductions and paragraphs short. People have short attention spans these days.
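Both rules of thumb above, a minimum length and no keyword stuffing, are easy to check with a script. In this sketch the 300-word floor comes from the guideline above, while the 3% keyword-density ceiling is an illustrative assumption (search engines publish no such threshold), and the function name is hypothetical.

```python
import re

WORD_RE = re.compile(r"[a-z0-9']+")


def content_stats(text: str, keyword: str, min_words: int = 300, max_density: float = 0.03) -> dict:
    """Word count plus the share of words taken up by a (possibly multi-word) keyword."""
    words = WORD_RE.findall(text.lower())
    kw = WORD_RE.findall(keyword.lower())
    n = len(kw)
    # Count every position where the keyword's words appear in sequence.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == kw) if n else 0
    density = hits * n / len(words) if words else 0.0
    return {
        "word_count": len(words),
        "thin": len(words) < min_words,
        "keyword_density": density,
        "stuffed": density > max_density,
    }
```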

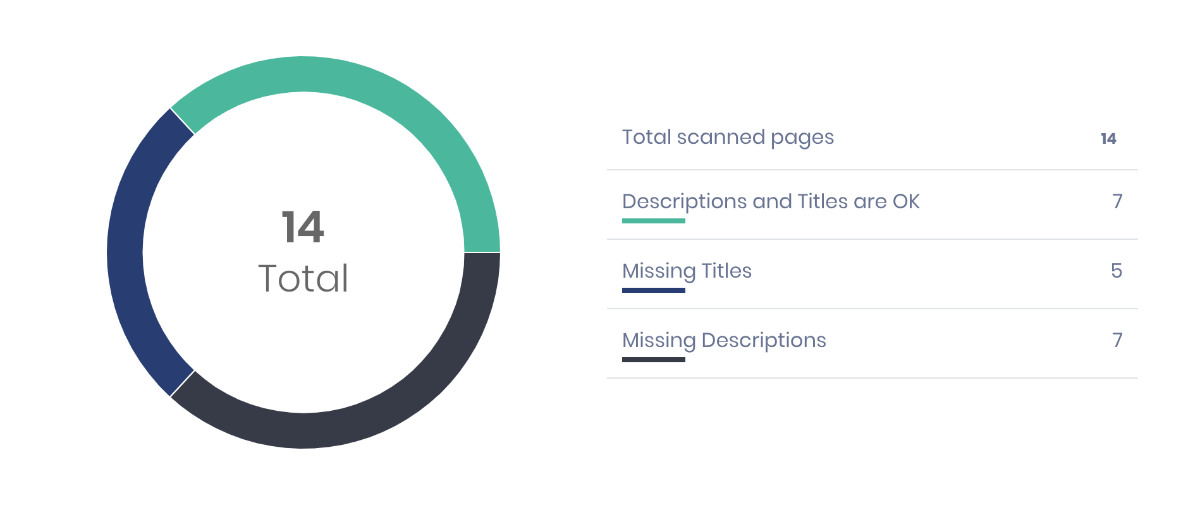

On a technical level, you can audit your content by looking at: information architecture (does each page have a clear purpose?); keyword cannibalization, which happens when multiple pages target the same keyword and end up competing with each other; HTML markup; titles; tags; images; and, last but not least, external links. These are all an important part of the SEO toolkit.
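Keyword cannibalization can be spotted by grouping pages by their target keyword. The sketch assumes you keep a mapping of each URL to its primary keyword (for example, exported from a spreadsheet); the function name is hypothetical.

```python
from collections import defaultdict


def cannibalized_keywords(targets: dict) -> dict:
    """Return keywords that more than one URL is targeting, with those URLs."""
    by_keyword = defaultdict(list)
    for url, keyword in targets.items():
        # Normalize case and stray whitespace so near-identical entries group together.
        by_keyword[keyword.strip().lower()].append(url)
    return {kw: sorted(urls) for kw, urls in by_keyword.items() if len(urls) > 1}
```

For each keyword it returns, decide which page should own it, then merge, retarget or redirect the others.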

When it comes to titles, consider whether the length is right (not too short or too long), whether the title contains your keywords, and whether it accurately describes the content it relates to. By the same token, effective meta descriptions and tags indicate what the content is about, which should result in a higher click-through rate. Using H1 and other heading tags makes a post easier for search engines to crawl and easier for users to read.

Regarding images, add alt attributes (alt text) to tell search engines what each image shows; without them, search engines have much less information about the image. Finally, make sure you link to quality content and that the anchor text of each link fits the subject of the page it points to. It goes without saying that broken links should be fixed, and that the whole purpose of these factors is to create a frictionless experience for the user and to boost your website’s SEO.
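The title, meta description, heading and alt-text checks can be combined into a small per-page audit. This is a sketch using Python’s html.parser; the 30 to 60 character title range and the expectation of exactly one h1 are common rules of thumb rather than search-engine requirements, and all names are hypothetical.

```python
from html.parser import HTMLParser


class OnPageAudit(HTMLParser):
    def __init__(self):
        super().__init__()
        self.title = ""
        self._in_title = False
        self.meta_description = None
        self.h1_count = 0
        self.images_missing_alt = 0

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attr.get("name") or "").lower() == "description":
            self.meta_description = attr.get("content") or ""
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "img" and not (attr.get("alt") or "").strip():
            # Also flags alt="", which is correct for purely decorative images,
            # so review these hits by hand.
            self.images_missing_alt += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def audit_page(html: str, title_range=(30, 60)) -> list:
    """Return a list of human-readable on-page issues (empty if none found)."""
    page = OnPageAudit()
    page.feed(html)
    page.close()
    issues = []
    length = len(page.title.strip())
    low, high = title_range
    if not low <= length <= high:
        issues.append(f"title length {length} outside {low}-{high} characters")
    if not page.meta_description:
        issues.append("missing meta description")
    if page.h1_count != 1:
        issues.append(f"{page.h1_count} <h1> tags (expected 1)")
    if page.images_missing_alt:
        issues.append(f"{page.images_missing_alt} image(s) without alt text")
    return issues
```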

Utilize internal links more

To make the most out of internal links, make sure they are linking to the best performing content on your site.
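To check whether your best content actually receives internal links, you can count inbound links per page. This sketch assumes a crawl-derived link map and a list of top pages taken from your analytics; the minimum of three inbound links and the function names are hypothetical.

```python
from collections import Counter


def inbound_link_counts(links: dict) -> Counter:
    """Count how many distinct pages link to each page (self-links ignored)."""
    counts = Counter()
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


def underlinked_top_pages(links: dict, top_pages, min_inbound: int = 3) -> dict:
    """Top pages that receive fewer than min_inbound internal links."""
    counts = inbound_link_counts(links)
    # A Counter returns 0 for pages that no page links to.
    return {page: counts[page] for page in top_pages if counts[page] < min_inbound}
```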

Improve on-page SEO

Auditing this part means analysing different elements of your site.

Firstly, are your URLs short and user-friendly? Generally speaking, they should be less than 115 characters long and contain relevant keywords. To keep search engines happy, use subfolders rather than subdomains, since subdomains can be treated as separate sites. It’s also a good idea to use static URLs; if you must use parameters, keep them to a minimum. On a related note, use hyphens rather than underscores to separate words, as this is more SEO-friendly.
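Most of these URL guidelines are mechanical enough to check automatically. In this sketch the 115-character limit comes from the paragraph above, while the maximum of two query parameters is an illustrative assumption; keyword relevance is left to human judgement, and the function name is hypothetical (it needs Python 3.9+ for str.removeprefix).

```python
from urllib.parse import parse_qsl, urlsplit


def url_issues(url: str, main_host: str, max_len: int = 115, max_params: int = 2) -> list:
    """Flag URLs that break the length, hyphen, subfolder and parameter guidelines."""
    parts = urlsplit(url)
    issues = []
    if len(url) >= max_len:  # guideline: fewer than 115 characters
        issues.append(f"URL is {len(url)} characters long")
    if "_" in parts.path:
        issues.append("underscores in path; prefer hyphens")
    # Treat www.example.com and example.com as the same site.
    root = main_host.lower().removeprefix("www.")
    host = (parts.hostname or "").removeprefix("www.")
    if host != root and host.endswith("." + root):
        issues.append("content on a subdomain; consider a subfolder")
    params = parse_qsl(parts.query, keep_blank_values=True)
    if len(params) > max_params:
        issues.append(f"{len(params)} query parameters")
    return issues
```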

URLs can also cause duplicate content: if two different URLs lead to the same page, a search engine may treat them as two separate pages.
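One way to find URLs that may serve the same page is to normalize them and group the results. The normalization rules here (lower-case host, no trailing slash, no fragment, sorted query string, utm_ tracking parameters dropped) are assumptions that suit many sites but not all, so adapt them to how your server actually treats URLs. The function names are hypothetical.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: parameters starting with these prefixes never change page content.
TRACKING_PREFIXES = ("utm_",)


def normalize(url: str) -> str:
    parts = urlsplit(url)
    host = (parts.hostname or "").lower()  # note: drops any explicit port
    path = parts.path.rstrip("/") or "/"
    query = urlencode(sorted(
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if not key.startswith(TRACKING_PREFIXES)
    ))
    return urlunsplit((parts.scheme.lower(), host, path, query, ""))


def duplicate_groups(urls) -> list:
    """Groups of two or more URLs that normalize to the same address."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return [sorted(group) for group in groups.values() if len(group) > 1]
```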

In short, follow these URL best practices consistently across your site.

For a comprehensive audit of your on-page SEO, be sure to check out Hexometer, which can analyze all your pages on a daily or weekly basis for SEO issues that can affect your search performance.

Off-page ranking factors

When we consider off-page factors, we are talking about things such as popularity and trustworthiness. Popularity is really about traffic: a search engine looks at how much traffic you get and how it compares with other sites. It will also check whether quality sites are linking back to your pages. Trustworthiness, on the other hand, can be subjective, but a site will not be trusted if it engages in black-hat SEO or contains malware. Another item on the checklist is evaluating the trustworthiness of neighbouring sites.

Social engagement and mentions can also boost these two abstract qualities: with more visibility and buzz, users are more likely to check out your site.

Monitor indexing issues

Use the site: search operator (for example, site:example.com) to estimate how many pages of your website a given search engine has indexed. Bear in mind that the number you see is probably not accurate, but it can still be useful when compared with the number of pages you know your site has from a crawl and your sitemap. Ideally, both figures are more or less the same. If the index count is smaller than the actual count, parts of your site are not being indexed. If the index count is significantly larger than the actual count, your site probably has duplicate content. Fortunately, you can check for this type of content manually.
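The comparison described above reduces to a ratio. This sketch assumes you supply the estimated index count and the known page count yourself; the 10% tolerance used to decide what counts as "more or less the same" is an illustrative assumption, and the function name is hypothetical.

```python
def index_coverage(indexed: int, actual: int, tolerance: float = 0.10) -> str:
    """Compare an estimated index count with the page count from a crawl and sitemap."""
    if actual <= 0:
        raise ValueError("actual page count must be positive")
    ratio = indexed / actual
    if ratio < 1 - tolerance:
        return "under-indexed: parts of the site are not being indexed"
    if ratio > 1 + tolerance:
        return "over-indexed: the site probably has duplicate content"
    return "roughly matched"
```

For example, index_coverage(500, 1000) reports the site as under-indexed, since only half of the known pages appear to be in the index.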

You also need to make sure your most important pages are being indexed. If these pages are not indexed, check whether they are accessible, or whether they have been penalized. If you are unsure whether you have been penalized, don’t worry: it is usually quite obvious, either because your pages are deindexed even though they are accessible, or because you have received a penalty notification (in Google’s case, a manual action notice in Search Console). In that case, identify and fix the mistake, then submit a reconsideration request to the search engine.

A simple web search for your company’s name will show whether your website tops the results. If it does, that’s a good sign.

Make sure search engines are indexing one version of your site

Believe it or not, several versions of your site can be indexed (for example, http:// and https://, or www and non-www), and the search engine will treat them as separate sites. Fortunately, this is easy to fix: choose one preferred version and use permanent (301) redirects to send users and crawlers from the alternate versions to it.
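To confirm that the alternate versions redirect correctly, request each variant without following redirects and inspect the first response. This standard-library sketch assumes the four common http/https and www/non-www variants of the homepage; it compares the Location header exactly, so a relative or differently formatted Location is reported for manual review. The function names are hypothetical.

```python
import http.client
from urllib.parse import urlsplit


def first_hop(url: str, timeout: float = 10.0):
    """Return (status, Location header) without following any redirect."""
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        path = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def check_site_versions(domain: str, canonical: str, hop=first_hop) -> dict:
    """Variants that do not 301-redirect straight to the canonical homepage."""
    problems = {}
    variants = (f"http://{domain}/", f"http://www.{domain}/",
                f"https://{domain}/", f"https://www.{domain}/")
    for variant in variants:
        if variant == canonical:
            continue
        status, location = hop(variant)
        if status != 301 or location != canonical:
            problems[variant] = (status, location)
    return problems
```

Passing a dictionary lookup as hop lets you test the logic offline; in production, the default makes one request per variant.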

Take a look at user experience

You need to optimize your content for user-experience (UX) signals, as these indicate how satisfied your visitors are. When the people who visit your site are happy, search engines tend to reward you with better rankings. If you are scoring poorly here, it could simply be a matter of adding better information to your content and making it more relevant. In other situations, you might have to declutter the screen and make sure the page design is not filled with too many boxes or irrelevant content.

Check keyword rankings

Keywords are a valuable currency when it comes to SEO. If you are trying to drive traffic, you need to choose your keywords carefully to get noticed: they have to strike a balance between being popular and being overused. Your blog post may be a work of art, but you can use precise data to make it SEO-ready.
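If your rank tracker can export keyword positions, comparing two snapshots highlights which keywords moved. This sketch assumes each snapshot is a dictionary mapping keyword to position (1 is the top result); the three-position threshold is illustrative and the function name is hypothetical.

```python
def rank_changes(previous: dict, current: dict, min_move: int = 3) -> dict:
    """Keywords whose position moved by at least min_move, or that appeared or dropped out."""
    changes = {}
    for keyword in previous.keys() | current.keys():
        before, after = previous.get(keyword), current.get(keyword)
        if before is None or after is None:
            # Newly ranking, or no longer in the tracked results.
            changes[keyword] = (before, after)
        elif abs(before - after) >= min_move:
            changes[keyword] = (before, after)
    return changes
```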

Here’s a shortcut

If you don’t have the time to manually audit your site, then you can use a tool that does all of this for you automatically. Hexometer is a sophisticated AI platform which works 24/7 to check how different pages on your website are performing, and these tests are done all over the world and on many different devices. This tool monitors your website, page speed, plugins, broken links, user experience, security, and it will analyse your landing pages.

So, if you are a business selling internationally, Hexometer will flag issues affecting a particular mobile device or geography. You can be notified of any issues – for your convenience, you can set the frequency of these alerts and share them with particular teams in your office – so they can be addressed as quickly as possible, saving you precious time and money. It’s pretty easy to set up, too, and you won’t have to change any code on your website.

CMO & Co-founder

Helping entrepreneurs automate and scale via growth hacking strategies.
